In this tutorial, we will build a Smart Investment Portfolio Advisor application using Java, Spring Boot, LangChain4j, and OpenAI/Ollama. The application will provide investment advice based on the latest stock prices, company information, and financial results. By the end of this guide, you will learn how to build AI-powered applications that can analyze real-time data and provide valuable insights to users.

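Our application will leverage Spring Boot, LangChain4j, an LLM (OpenAI or Ollama), PostgreSQL, and the Financial Modeling Prep API.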

Go to Spring Initializr or an IDE of your choice to create a new Spring Boot project with the following dependencies:

Maven Dependencies (pom.xml)

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-jdbc</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
    <optional>true</optional>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-docker-compose</artifactId>
    <scope>runtime</scope>
    <optional>true</optional>
</dependency>
<dependency>
    <groupId>org.postgresql</groupId>
    <artifactId>postgresql</artifactId>
    <scope>runtime</scope>
</dependency>
```

Now add the base LangChain4j dependency for Spring Boot. Refer to the LangChain4j Spring Integration Documentation for more details.

```xml
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-spring-boot-starter</artifactId>
    <version>0.35.0</version>
</dependency>
```

Now depending on the LLM you want to use (OpenAI/Ollama), you can add the following dependency:

For OpenAI

```xml
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-open-ai-spring-boot-starter</artifactId>
    <version>0.35.0</version>
</dependency>
```

For Ollama

```xml
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-ollama-spring-boot-starter</artifactId>
    <version>0.35.0</version>
</dependency>
```

For any other LLM, add the corresponding dependency. Refer to the LangChain4j Language Models documentation for more details.

We will use Docker Compose to run PostgreSQL and pgAdmin. Since we added the Docker Compose support dependency, Spring Initializr generates a Docker Compose file (compose.yaml) in the project root.

We will modify it as shown below to expose the database port and add pgAdmin.

```yaml
services:
  postgres:
    image: 'postgres:latest'
    environment:
      - 'POSTGRES_DB=stock-advisor-db'
      - 'POSTGRES_PASSWORD=secret'
      - 'POSTGRES_USER=stock-advisor-user'
    ports:
      - '5432:5432'
    restart: unless-stopped
  pgadmin:
    image: dpage/pgadmin4
    environment:
      PGADMIN_DEFAULT_EMAIL: admin@example.com
      PGADMIN_DEFAULT_PASSWORD: admin
    ports:
      - "8081:80"
    depends_on:
      - postgres
    restart: unless-stopped
```

Run the following command to start the database and pgAdmin, or start the containers from your IDE. When you run the Spring Boot application, it also starts the containers automatically.

```shell
docker-compose up
```

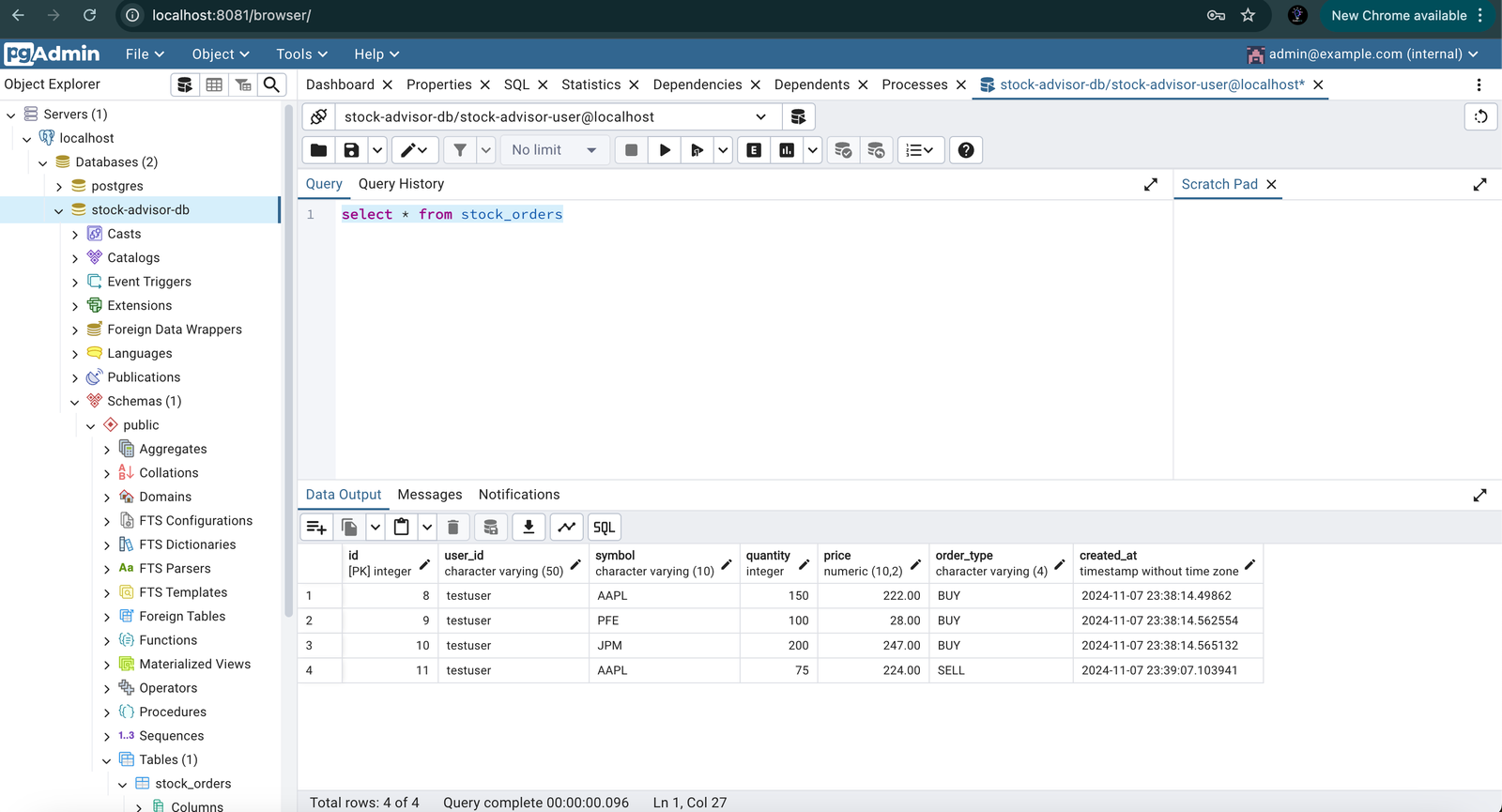

We need the table below to store the stock orders. Create a schema.sql file in the resources folder and add the following SQL. Spring Boot runs this script when the application starts; because PostgreSQL is not an embedded database, this requires spring.sql.init.mode=always in application.properties.

```sql
CREATE TABLE IF NOT EXISTS stock_orders (
    id SERIAL PRIMARY KEY,
    user_id VARCHAR(50) NOT NULL,
    symbol VARCHAR(10) NOT NULL,
    quantity INTEGER NOT NULL,
    price DECIMAL(10,2) NOT NULL,
    order_type VARCHAR(4) NOT NULL CHECK (order_type IN ('BUY', 'SELL')),
    created_at TIMESTAMP NOT NULL
);
```

Let us create a record for Stock Order mapped to the stock_orders table.

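```java
// StockOrder.java
import org.springframework.data.annotation.Id;
import org.springframework.data.relational.core.mapping.Column;
import org.springframework.data.relational.core.mapping.Table;

import java.math.BigDecimal;
import java.time.LocalDateTime;

@Table("stock_orders")
public record StockOrder(
        @Id Long id,
        @Column("user_id") String userId,
        String symbol,
        Integer quantity,
        BigDecimal price,
        @Column("order_type") OrderType orderType,
        LocalDateTime createdAt
) {}

// OrderType.java
public enum OrderType {
    BUY, SELL
}
```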

We will also create a record, StockHoldingDetails, to represent the current position held for each stock symbol.

```java
public record StockHoldingDetails(String stockSymbol, double quantity) {
}
```

```java
import org.springframework.data.repository.ListCrudRepository;
import org.springframework.stereotype.Repository;

@Repository
public interface StockOrderRepository extends ListCrudRepository<StockOrder, Long> {
}
```

```java
import lombok.AllArgsConstructor;
import org.springframework.stereotype.Service;

import java.time.LocalDateTime;
import java.util.List;
import java.util.stream.Collectors;

@Service
@AllArgsConstructor
public class StockOrderService {

    private final StockOrderRepository stockOrderRepository;

    public StockOrder createOrder(StockOrder order) {
        // The id is generated by the database; the user id is hard coded for now
        StockOrder newOrder = new StockOrder(
                null,
                "testuser",
                order.symbol(),
                order.quantity(),
                order.price(),
                order.orderType(),
                LocalDateTime.now()
        );
        return stockOrderRepository.save(newOrder);
    }

    public List<StockOrder> getAllOrders() {
        return stockOrderRepository.findAll();
    }

    public List<StockHoldingDetails> getStockHoldingDetails() {
        // BUY orders add to the position for a symbol, SELL orders subtract from it
        return stockOrderRepository.findAll().stream()
                .collect(Collectors.groupingBy(StockOrder::symbol,
                        Collectors.summingDouble(order ->
                                order.orderType() == OrderType.BUY ? order.quantity() : -order.quantity())))
                .entrySet().stream()
                .map(entry -> new StockHoldingDetails(entry.getKey(), entry.getValue()))
                .collect(Collectors.toList());
    }
}
```

We will hard code the user_id for now. In a real-world application, you would authenticate the user and get the user_id from the authentication token.

The getStockHoldingDetails method returns the current position for each stock symbol. It groups the orders by symbol and uses streams to compute the net quantity, adding BUY orders and subtracting SELL orders.
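The grouping logic is easier to follow on a few sample orders. The following is a minimal stand-alone sketch, not the service itself: the Order record and the HoldingsSketch class are simplified stand-ins invented for illustration, with no Spring or database involved.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Stand-alone sketch: Order is a simplified, hypothetical stand-in for StockOrder.
class HoldingsSketch {

    enum OrderType { BUY, SELL }

    record Order(String symbol, int quantity, OrderType orderType) {}

    // Net quantity per symbol: BUY adds to the position, SELL subtracts from it.
    static Map<String, Double> netHoldings(List<Order> orders) {
        return orders.stream()
                .collect(Collectors.groupingBy(
                        Order::symbol,
                        TreeMap::new, // sorted keys, only to keep the printed output stable
                        Collectors.summingDouble(o ->
                                o.orderType() == OrderType.BUY ? o.quantity() : -o.quantity())));
    }

    public static void main(String[] args) {
        List<Order> orders = List.of(
                new Order("AAPL", 10, OrderType.BUY),
                new Order("AAPL", 3, OrderType.SELL),
                new Order("NVDA", 5, OrderType.BUY));
        System.out.println(netHoldings(orders)); // {AAPL=7.0, NVDA=5.0}
    }
}
```

Selling 3 of the 10 AAPL shares leaves a net position of 7, which is the value the service would report for that symbol.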

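```java
import lombok.AllArgsConstructor;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@AllArgsConstructor
public class StockAdvisorController {

    @GetMapping("/chat")
    public String chat(String userMessage) {
        // Placeholder: the call to the AI model is wired in later in this tutorial
        throw new UnsupportedOperationException("AI model not wired in yet");
    }
}
```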

There are a couple of ways to integrate with an LLM using LangChain4j:

- The low-level ChatLanguageModel API, which offers the most power and flexibility. This is similar to the ChatClient in Spring AI.
- The high-level AI Services API, which hides most of that plumbing behind a plain Java interface.

We will use the high-level API for simplicity. First, we need to define an interface that represents the AI model and annotate it with @AiService.

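```java
import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.spring.AiService;

@AiService
public interface StockAdvisorAssistant {

    @SystemMessage("""
            You are a polite stock advisor assistant who provides investment advice.
            """)
    String chat(String userMessage);
}
```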

userMessage is the input message from the user. It is sent to the AI model with the role user, and the response from the AI model is returned to the user.

The @SystemMessage text is sent to the AI model with the role system. It usually provides instructions about the LLM's role, behavior, and response style within the conversation. Since LLMs prioritize system messages, it's important to prevent users from injecting content into them. These messages are usually placed at the beginning of the conversation.

For example, if the user message is "What is the stock price of Apple?", the request body sent to the AI model will contain a part like the one below.

```json
"messages": [
  {
    "role": "system",
    "content": "You are a polite stock advisor assistant who provides investment advice."
  },
  {
    "role": "user",
    "content": "What is the stock price of Apple?"
  }
]
```

Now let us inject the AI model into the controller and call the chat method.

```java
@RestController
@AllArgsConstructor
public class StockAdvisorController {

    private final StockAdvisorAssistant stockAdvisorAssistant;

    @GetMapping("/chat")
    public String chat(String userMessage) {
        return stockAdvisorAssistant.chat(userMessage);
    }
}
```

Now let us add some configuration to the application.properties file to configure the AI model.

```properties
# Ollama Configuration
langchain4j.ollama.chat-model.base-url=http://localhost:11434
langchain4j.ollama.chat-model.model-name=llama3.1:8b
langchain4j.ollama.chat-model.temperature=0.8
langchain4j.ollama.chat-model.timeout=PT60S
langchain4j.ollama.chat-model.log-requests=true
langchain4j.ollama.chat-model.log-responses=true

# OpenAI Configuration. SPRING_AI_OPENAI_API_KEY set as environment variable
langchain4j.open-ai.chat-model.api-key=${SPRING_AI_OPENAI_API_KEY}
langchain4j.open-ai.chat-model.model-name=gpt-4o
langchain4j.open-ai.chat-model.log-requests=true
langchain4j.open-ai.chat-model.log-responses=true

# Logging Configuration
logging.level.dev.langchain4j=DEBUG
logging.level.dev.ai4j.openai4j=DEBUG
```

Now let us test our endpoint by sending a message to the /chat endpoint.

```shell
curl -X GET "http://localhost:8080/chat?userMessage=Who%20are%20you%3F"
```

Response will be something like below.

"I am a virtual assistant programmed to provide financial advice and stock market insights. My purpose is to assist you with information on stocks, including prices, company profiles, and financial statements, and to help you with stock orders. If there's anything specific you need help with, feel free to ask!"

LLMs do not keep any history or memory between calls. If we want the LLM to remember previous interactions, we need to pass the relevant data from those interactions along with the current one. For this, LangChain4j provides the ChatMemory abstraction along with multiple out-of-the-box implementations. We will configure a MessageWindowChatMemory bean, which stores the last n messages in memory. If you restart the application, the memory is lost.

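```java
import dev.langchain4j.memory.ChatMemory;
import dev.langchain4j.memory.chat.MessageWindowChatMemory;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class AssistantConfiguration {

    @Bean
    ChatMemory chatMemory() {
        // Keep only the 20 most recent messages of the conversation
        return MessageWindowChatMemory.withMaxMessages(20);
    }
}
```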
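To make the "last n messages" behavior concrete, here is a minimal sketch of a sliding window over plain strings. It only illustrates the idea and is not LangChain4j's MessageWindowChatMemory, which works on typed chat messages and handles details (for example system messages) that this sketch ignores. The class name WindowMemorySketch is hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

// Illustration of the sliding-window idea only; not the library implementation.
class WindowMemorySketch {

    private final int maxMessages;
    private final Deque<String> messages = new ArrayDeque<>();

    WindowMemorySketch(int maxMessages) {
        this.maxMessages = maxMessages;
    }

    // Appends a message and evicts the oldest ones once the window is full.
    void add(String message) {
        messages.addLast(message);
        while (messages.size() > maxMessages) {
            messages.removeFirst();
        }
    }

    // Snapshot of what would be sent to the model on the next call.
    List<String> messages() {
        return List.copyOf(messages);
    }

    public static void main(String[] args) {
        WindowMemorySketch memory = new WindowMemorySketch(3);
        for (String m : List.of("user: hi", "ai: hello", "user: price of AAPL?", "ai: ...")) {
            memory.add(m);
        }
        System.out.println(memory.messages());
        // [ai: hello, user: price of AAPL?, ai: ...]
    }
}
```

With a window of 3, the fourth message pushes out "user: hi", so the model no longer sees the start of the conversation. Choosing the window size is a trade-off between context and request size.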

If you want to persist the chat memory, you can back a ChatMemory with your own storage by implementing the ChatMemoryStore interface from LangChain4j.

```java
public interface ChatMemoryStore {

    List<ChatMessage> getMessages(Object memoryId);

    void updateMessages(Object memoryId, List<ChatMessage> messages);

    void deleteMessages(Object memoryId);
}
```

LLMs have a training cutoff date, which is often a year or more in the past, so they do not have the latest information about companies. We can give the LLM tools to fetch current company information from the Financial Modeling Prep API.

Let us add a service to fetch some important details about the companies from Financial Modeling Prep API.

We will use RestClient, which was introduced in Spring Framework 6.1, for that. You can watch this video to know more about RestClient.

Let us initialize RestClient in a configuration class. You can get an API key from here and set it as an environment variable with the key stock.api.key.

```java
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.web.client.RestClient;

@Configuration
public class StockAPIConfig {

    @Value("${stock.api.key}")
    private String apiKey;

    @Bean
    public RestClient getRestClient() {
        return RestClient.builder()
                .baseUrl("https://financialmodelingprep.com/api/v3")
                .build();
    }

    public String getApiKey() {
        return apiKey;
    }
}
```

Now let us create a service to fetch the company details.

We will be calling 5 different endpoints to fetch the company details.

- /quote/{stockSymbols} - To get the stock price (accepts multiple stock symbols separated by ,)
- /profile/{stockSymbols} - To get the company profile (accepts multiple stock symbols separated by ,)
- /balance-sheet-statement/{stockSymbols} - To get the balance sheet statements (needs to be called for each stock symbol separately)
- /income-statement/{stockSymbols} - To get the income statements (needs to be called for each stock symbol separately)
- /cash-flow-statement/{stockSymbols} - To get the cash flow statements (needs to be called for each stock symbol separately)

```java
import dev.langchain4j.agent.tool.P;
import dev.langchain4j.agent.tool.Tool;
import lombok.AllArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Service;
import org.springframework.web.client.RestClient;

import java.util.ArrayList;
import java.util.List;

@Service
@AllArgsConstructor
@Slf4j
public class StockInformationService {

    private final StockAPIConfig stockAPIConfig;
    private final RestClient restClient;

    @Tool("Returns the stock price for the given stock symbols")
    public String getStockPrice(@P("Stock symbols separated by ,") String stockSymbols) {
        log.info("Fetching stock price for stock symbols: {}", stockSymbols);
        return fetchData("/quote/" + stockSymbols);
    }

    @Tool("Returns the company profile for the given stock symbols")
    public String getCompanyProfile(@P("Stock symbols separated by ,") String stockSymbols) {
        log.info("Fetching company profile for stock symbols: {}", stockSymbols);
        return fetchData("/profile/" + stockSymbols);
    }

    @Tool("Returns the balance sheet statements for the given stock symbols")
    public List<String> getBalanceSheetStatements(@P("Stock symbols separated by ,") String stockSymbols) {
        log.info("Fetching balance sheet statements for stock symbols: {}", stockSymbols);
        return fetchDataForMultipleSymbols(stockSymbols, "/balance-sheet-statement/");
    }

    @Tool("Returns the income statements for the given stock symbols")
    public List<String> getIncomeStatements(@P("Stock symbols separated by ,") String stockSymbols) {
        log.info("Fetching income statements for stock symbols: {}", stockSymbols);
        return fetchDataForMultipleSymbols(stockSymbols, "/income-statement/");
    }

    @Tool("Returns the cash flow statements for the given stock symbols")
    public List<String> getCashFlowStatements(@P("Stock symbols separated by ,") String stockSymbols) {
        log.info("Fetching cash flow statements for stock symbols: {}", stockSymbols);
        return fetchDataForMultipleSymbols(stockSymbols, "/cash-flow-statement/");
    }

    // These endpoints accept one symbol per call, so issue one request per symbol
    private List<String> fetchDataForMultipleSymbols(String stockSymbols, String s) {
        List<String> data = new ArrayList<>();
        for (String symbol : stockSymbols.split(",")) {
            String response = fetchData(s + symbol);
            data.add(response);
        }
        return data;
    }

    // Calls the API and collapses whitespace to keep the payload sent to the LLM small
    private String fetchData(String s) {
        return restClient.get()
                .uri(s + "?apikey=" + stockAPIConfig.getApiKey())
                .retrieve()
                .body(String.class)
                .replaceAll("\\s+", " ").trim();
    }
}
```
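Because the symbol list is produced by the LLM, it may arrive with spaces after the commas or with a trailing comma, depending on the model. The sketch below shows one possible defensive way to expand the list into one request path per symbol. It is an optional variation, not the code used in this tutorial, and the names SymbolPathSketch and pathsFor are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: expands a comma-separated symbol list into request paths.
class SymbolPathSketch {

    // Trims each symbol and skips blanks, so "AAPL, NVDA," yields two clean paths.
    static List<String> pathsFor(String endpoint, String stockSymbols) {
        return Arrays.stream(stockSymbols.split(","))
                .map(String::trim)
                .filter(symbol -> !symbol.isEmpty())
                .map(symbol -> endpoint + symbol)
                .toList();
    }

    public static void main(String[] args) {
        System.out.println(pathsFor("/income-statement/", "AAPL, NVDA,"));
        // [/income-statement/AAPL, /income-statement/NVDA]
    }
}
```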

The @Tool annotation is part of the high-level tool API in LangChain4j. It marks a method as a tool that the AI model can call. The AI Service automatically converts such methods into ToolSpecifications and includes them in the request for each interaction with the LLM. When the LLM decides to call a tool, the AI Service executes the appropriate method, and the return value of the method (if any) is sent back to the LLM.

You can optionally give the tool a name through the @Tool annotation. If no name is provided, the method name is used as the tool name.

Method parameters can optionally be annotated with @P to provide a description of the parameter.
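The general idea of turning annotated methods into tool descriptions can be sketched with plain reflection. This is only an illustration of the mechanism, not how LangChain4j is implemented: DemoTool is a hypothetical, simplified stand-in for @Tool, defined here so the example compiles without the library.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

class ToolScanSketch {

    // Hypothetical stand-in for @Tool: a description plus an optional name.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface DemoTool {
        String value();
        String name() default "";
    }

    static class DemoTools {
        @DemoTool("Returns the stock price for the given stock symbols")
        public String getStockPrice(String stockSymbols) { return "..."; }

        @DemoTool(value = "Returns the company profile for the given stock symbols",
                name = "companyProfile")
        public String getCompanyProfile(String stockSymbols) { return "..."; }

        public String notATool() { return "..."; }
    }

    // Maps tool name to description; falls back to the method name when no name is set.
    static Map<String, String> describeTools(Class<?> type) {
        Map<String, String> tools = new TreeMap<>();
        for (Method method : type.getDeclaredMethods()) {
            DemoTool tool = method.getAnnotation(DemoTool.class);
            if (tool == null) {
                continue; // methods without the annotation are not exposed
            }
            String name = tool.name().isEmpty() ? method.getName() : tool.name();
            tools.put(name, tool.value());
        }
        return tools;
    }

    public static void main(String[] args) {
        System.out.println(describeTools(DemoTools.class).keySet());
        // [companyProfile, getStockPrice]
    }
}
```

The descriptions matter because they are the only information the model has when deciding which tool to call, so they are worth writing carefully.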

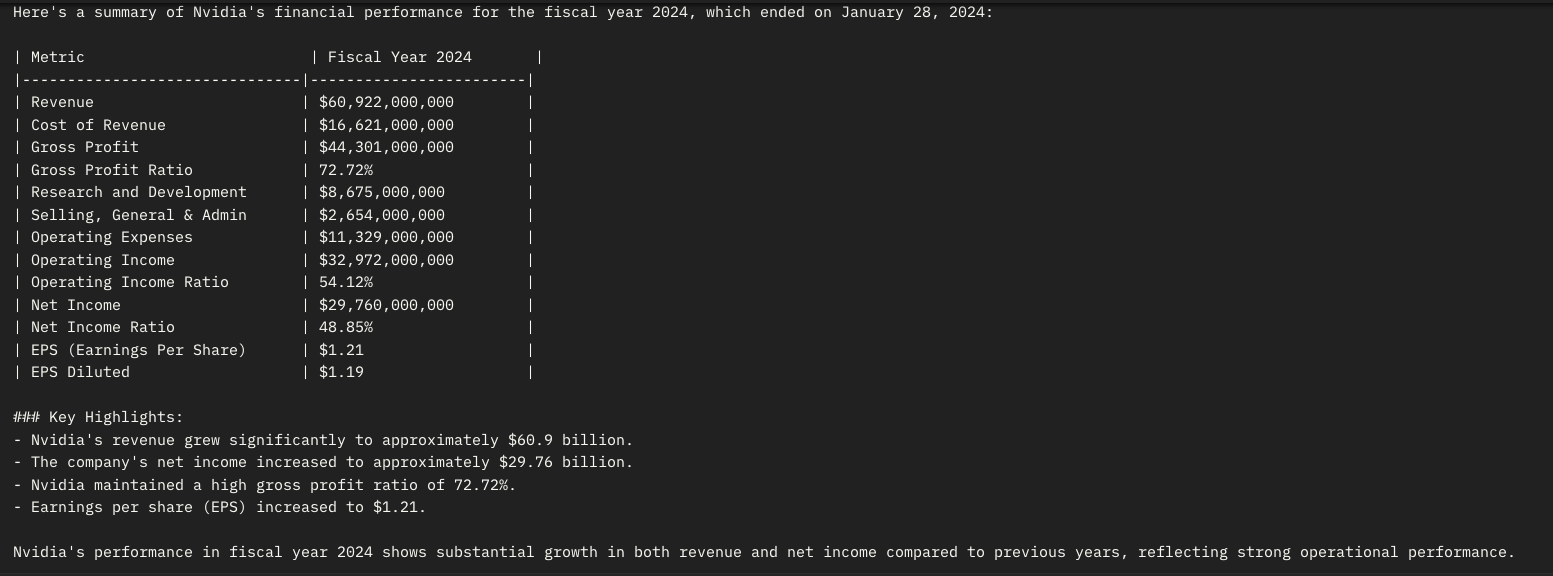

Let us call the chat endpoint with a message "How was the performance of Nvidia this year"

```shell
curl -X GET "http://localhost:8080/chat?userMessage=How%20was%20the%20performance%20of%20Nvidia%20this%20year"
```

Let us check the logs and see what happened.

Step 1: The LLM is called with the request below, which carries the user message and the system message. The available tools/functions are passed along with them.

```json
{
  "messages" : [
    {
      "role" : "system",
      "content" : "You are a polite stock advisor assistant who provides investment advice."
    },
    {
      "role" : "user",
      "content" : "How was the performance of Nvidia this year"
    }
  ],
  "temperature" : 0.7,
  "tools" : [
    {
      "type" : "function",
      "function" : {
        "name" : "getCompanyProfile",
        "description" : "Returns the company profile for the given stock symbols",
        "parameters" : {
          "type" : "object",
          "properties" : {
            "stockSymbols" : {
              "type" : "string",
              "description" : "Stock symbols separated by ,"
            }
          },
          "required" : [ "stockSymbols" ]
        }
      }
    }
    //Other tools/functions
  ]
}
```

Step 2: The LLM responds to the application with the set of tools/functions to be called, along with their parameters.

```json
{
  "message": {
    "role": "assistant",
    "content": null,
    "tool_calls": [
      {
        "id": "call_x41R65yCDguc93xXBERn7LwB",
        "type": "function",
        "function": {
          "name": "getIncomeStatements",
          "arguments": "{\"stockSymbols\":\"NVDA\"}"
        }
      }
    ]
  }
}
```

Step 3: The application calls the getIncomeStatements method with the parameter NVDA and sends the result back to the LLM.

```json
{
  "messages" : [
    {
      "role" : "system",
      "content" : "You are a polite stock advisor assistant who provides investment advice."
    },
    {
      "role" : "user",
      "content" : "How was the performance of Nvidia this year"
    },
    {
      "role" : "assistant",
      "tool_calls" : [
        {
          "id" : "call_x41R65yCDguc93xXBERn7LwB",
          "type" : "function",
          "function" : {
            "name" : "getIncomeStatements",
            "arguments" : "{\"stockSymbols\":\"NVDA\"}"
          }
        }
      ]
    },
    {
      "role" : "tool",
      "tool_call_id" : "call_x41R65yCDguc93xXBERn7LwB",
      "content" : //Response from the getIncomeStatements method
    }
  ]
}
```

Step 4: The LLM responds with a result based on the output of the tool/function calls and the conversation history.

```json
{
  "message" : {
    "role" : "assistant",
    "content" : "Here's a summary of Nvidia's financial performance for the fiscal year 2024...."
  }
}
```
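The four steps above form a loop that the AI Service runs on our behalf. The sketch below simulates that loop in plain Java, assuming a fake model that always requests getIncomeStatements for NVDA first and then answers once it sees a tool result. All names here (ToolLoopSketch, Reply, fakeModel) are hypothetical and exist only to show the control flow; no real LLM or LangChain4j class is involved.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

class ToolLoopSketch {

    record Message(String role, String content) {}

    // A model reply is either a final answer or a request to call a tool.
    record Reply(String content, String toolName, String toolArgument) {
        boolean isToolCall() {
            return toolName != null;
        }
    }

    // Fake model: asks for a tool first, then answers once a tool result is present.
    static Reply fakeModel(List<Message> messages) {
        Message last = messages.get(messages.size() - 1);
        if (last.role().equals("tool")) {
            return new Reply("Summary based on: " + last.content(), null, null);
        }
        return new Reply(null, "getIncomeStatements", "NVDA");
    }

    static String chat(String userMessage, Map<String, Function<String, String>> tools) {
        List<Message> messages = new ArrayList<>();
        messages.add(new Message("system", "You are a polite stock advisor assistant."));
        messages.add(new Message("user", userMessage));
        Reply reply = fakeModel(messages);                      // step 1: first request
        while (reply.isToolCall()) {                            // step 2: model asks for a tool
            String result = tools
                    .getOrDefault(reply.toolName(), arg -> "unknown tool")
                    .apply(reply.toolArgument());               // step 3: run the tool locally
            messages.add(new Message("assistant", "tool call: " + reply.toolName()));
            messages.add(new Message("tool", result));
            reply = fakeModel(messages);                        // step 4: send the result back
        }
        return reply.content();
    }

    public static void main(String[] args) {
        Map<String, Function<String, String>> tools =
                Map.of("getIncomeStatements", symbol -> "income statements for " + symbol);
        System.out.println(chat("How was the performance of Nvidia this year", tools));
        // Summary based on: income statements for NVDA
    }
}
```

Note that the tool always runs inside the application, never inside the model: the model only names the tool and its arguments, which is why the logs show two separate requests to the LLM.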

Now let us add the @Tool annotation to the createOrder, getAllOrders and getStockHoldingDetails methods in the StockOrderService class. Note that this gives the LLM full access to call these methods.

Let us change our system prompt like below.

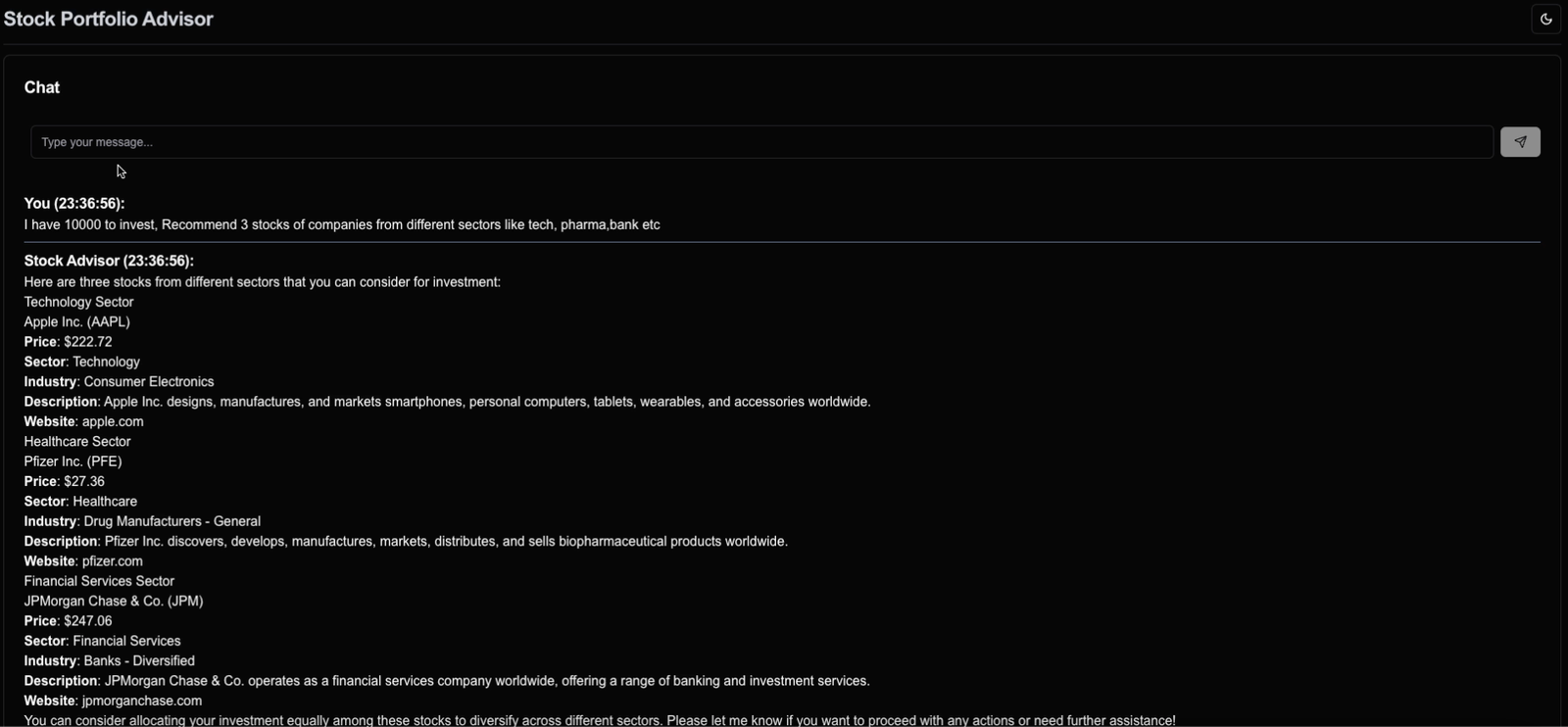

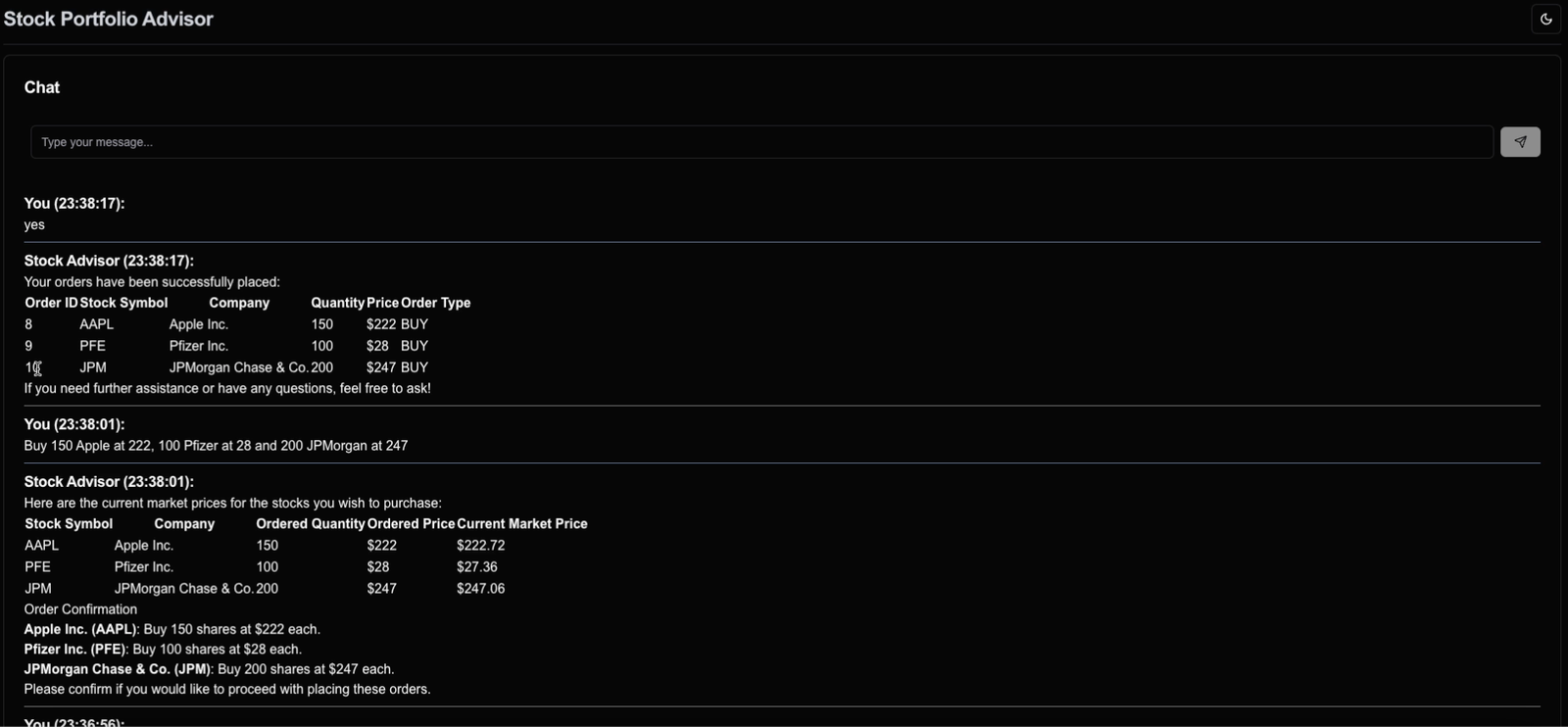

```java
@AiService
public interface StockAdvisorAssistant {

    @SystemMessage("""
            You are a polite stock advisor assistant who provides advice based on
            the latest stock price, company information and financial results.
            When you are asked to create a stock order, ask for a confirmation before creating it.
            In the confirmation message, include the stock symbol, quantity, and price and current market price.
            All your responses should be in markdown format.
            When you are returning a list of items like position, orders, list of stocks etc,
            return them in a table format.
            """)
    String chat(String userMessage);
}
```

We have created a simple UI using React and Next.js to interact with the Investment Advisor application. You can find the code here. A screenshot of testing done from the UI is below.

LangChain4j provides a fluent way for integrating Java applications with AI and blends seamlessly with Spring Boot.


You can find the code used in this blog here
