Having started with Java before Java 8, I have seen first-hand how Streams and lambda expressions transformed the way we handle collections and data processing. They provide a functional-style approach to processing sequences of elements that often makes code more readable and concise. In this guide, we will explore the core concepts of Java Streams and Collectors with practical examples and cover the key features, including Stream Gatherers, which were finalized in Java 24.

Related: Explore Stream Gatherers for advanced stream processing and Top Java Features 21-23 for the latest language improvements.

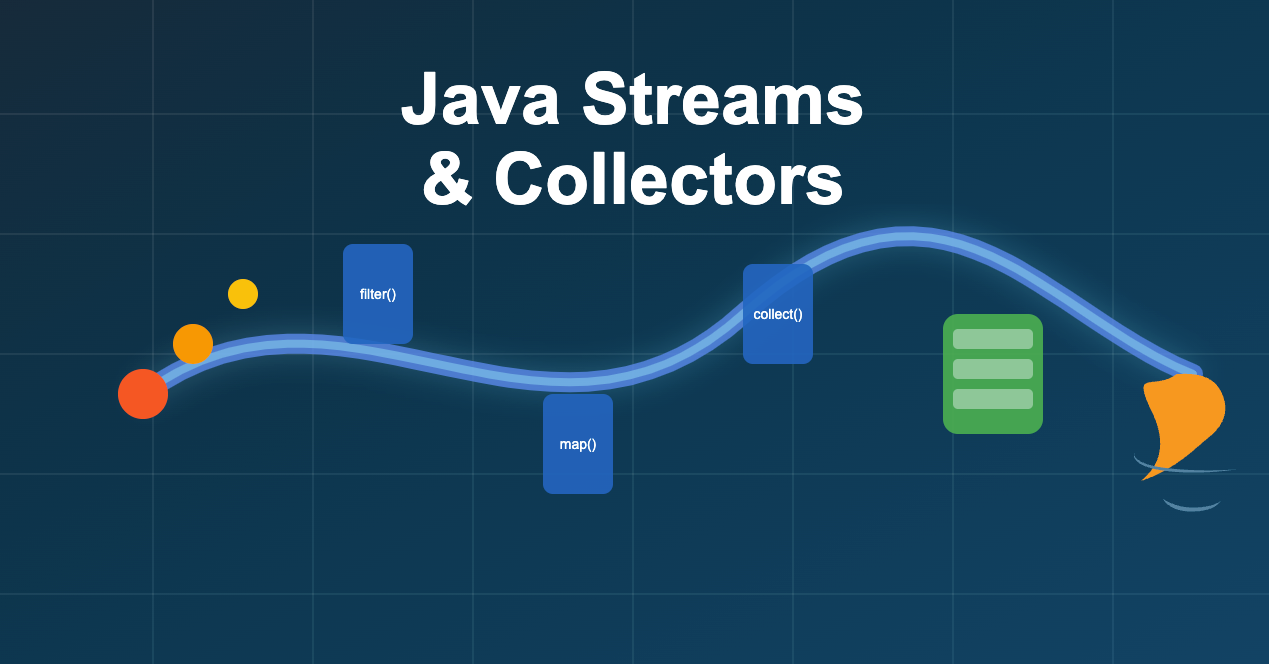

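At a high level, a stream pipeline has three key components:

- Source: where the elements come from, such as a collection, an array, or an I/O channel.
- Intermediate operations: lazy transformations such as filter(), map(), and sorted(), each of which returns a new stream.
- Terminal operation: an operation such as collect(), forEach(), or reduce() that triggers the processing and produces a result or a side effect.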
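A stream pipeline always has a source, zero or more intermediate operations, and exactly one terminal operation. The minimal sketch below (class and method names are illustrative, not from the article) records which elements filter() actually examines. It shows that nothing runs until a terminal operation is invoked, and that findFirst() stops the pipeline as soon as it has a match. The side effect inside filter() exists only to observe evaluation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

class LazyPipelineDemo {

    // Builds a pipeline but never calls a terminal operation, so the filter lambda never runs.
    static int visitedWithoutTerminal(List<String> names) {
        List<String> visited = new ArrayList<>();
        names.stream().filter(name -> visited.add(name)); // intermediate operation only
        return visited.size();
    }

    // Returns the first name longer than four characters, recording each name filter() examines.
    static Optional<String> firstLongName(List<String> names, List<String> visited) {
        return names.stream()                  // source
                .filter(name -> {              // intermediate operation (lazy)
                    visited.add(name);         // side effect used only to observe evaluation
                    return name.length() > 4;
                })
                .map(String::toUpperCase)      // intermediate operation (lazy)
                .findFirst();                  // terminal operation: triggers processing, short-circuits
    }

    public static void main(String[] args) {
        List<String> names = List.of("Alex", "Brian", "Charles");
        System.out.println(visitedWithoutTerminal(names)); // 0

        List<String> visited = new ArrayList<>();
        System.out.println(firstLongName(names, visited).orElse("none")); // BRIAN
        System.out.println(visited);                                       // [Alex, Brian]
    }
}
```

"Charles" is never examined: once "Brian" matches, findFirst() has its answer and the remaining elements are skipped.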

Streams can be created from various data sources, including collections, arrays, and I/O channels. Here are some common ways to create streams:

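Collections such as List and Set are the most common source of streams. Every collection in the Java Collections Framework provides a stream() method. A Map is not a Collection and has no stream() method of its own, but its keySet(), values(), and entrySet() views do: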

```java
// Creating a stream from a List
List<String> nameList = List.of("Alex", "Brian", "Charles");
Stream<String> nameStream = nameList.stream();

// Creating a stream from a Set
Set<Integer> numberSet = Set.of(1, 2, 3, 4, 5);
Stream<Integer> numberStream = numberSet.stream();

// Creating a stream from a Map's keys, values, or entries
Map<String, Integer> ageMap = Map.of("Alex", 25, "Brian", 32, "Charles", 41);
Stream<String> keyStream = ageMap.keySet().stream();
Stream<Integer> valueStream = ageMap.values().stream();
Stream<Map.Entry<String, Integer>> entryStream = ageMap.entrySet().stream();
```
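Streaming a map's entrySet() is the usual way to work with keys and values together. As a small sketch (the namesByAge helper is hypothetical), here is the same ageMap with its entries sorted by value using Map.Entry.comparingByValue() and then reduced to just the keys. Sorting matters here because Map.of() makes no promise about iteration order.

```java
import java.util.List;
import java.util.Map;

class MapEntryStreamDemo {

    // Returns the map's keys ordered by their associated value, ascending.
    static List<String> namesByAge(Map<String, Integer> ageMap) {
        return ageMap.entrySet().stream()
                .sorted(Map.Entry.comparingByValue()) // order entries by value (the age)
                .map(Map.Entry::getKey)               // keep only the name
                .toList();                            // Java 16+
    }

    public static void main(String[] args) {
        Map<String, Integer> ageMap = Map.of("Alex", 25, "Brian", 32, "Charles", 41);
        System.out.println(namesByAge(ageMap)); // [Alex, Brian, Charles]
    }
}
```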

Arrays can be converted to streams using static methods in the Arrays class:

```java
// Creating a stream from an array
String[] namesArray = {"Alex", "Brian", "Charles"};
Stream<String> arrayStream = Arrays.stream(namesArray);

// For primitive arrays, specialized streams are returned
int[] numbers = {1, 2, 3, 4, 5};
IntStream intStream = Arrays.stream(numbers);
```

Java NIO provides methods to create streams from files and other I/O sources:

```java
// Stream of lines from a file
try (Stream<String> lineStream = Files.lines(Path.of("data.txt"))) {
    lineStream.forEach(System.out::println);
}

// Stream of all files in a directory
try (Stream<Path> pathStream = Files.list(Path.of("/test-directory"))) {
    pathStream.forEach(System.out::println);
}

// Walking a directory tree
try (Stream<Path> walkStream = Files.walk(Path.of("/test-directory"))) {
    walkStream.filter(Files::isRegularFile)
              .forEach(System.out::println);
}
```

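Try-with-resources ensures that the stream is closed after use, which releases the underlying file handle and prevents resource leaks. This is one of the rare scenarios where we close a stream explicitly; streams created from collections or arrays hold no resources and do not need to be closed.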
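Closing a stream runs any handlers registered with onClose(); this is how the file-backed streams above release their handles. The sketch below uses onClose() on an in-memory stream as a stand-in for that cleanup (no real file is involved, and the class name is illustrative). It shows that try-with-resources triggers the handler, while a terminal operation on its own does not.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.stream.Stream;

class StreamCloseDemo {

    // try-with-resources calls close() at the end of the block, which runs the handler.
    static boolean closeHandlerRuns() {
        AtomicBoolean closed = new AtomicBoolean(false);
        try (Stream<String> lines = Stream.of("a", "b", "c")
                .onClose(() -> closed.set(true))) { // stands in for releasing a file handle
            System.out.println(lines.count());      // 3
        }
        return closed.get();
    }

    // A terminal operation consumes the stream but does not close it.
    static boolean closeHandlerRunsWithoutClose() {
        AtomicBoolean closed = new AtomicBoolean(false);
        Stream.of("a", "b", "c").onClose(() -> closed.set(true)).count();
        return closed.get();
    }

    public static void main(String[] args) {
        System.out.println(closeHandlerRuns());             // true
        System.out.println(closeHandlerRunsWithoutClose()); // false
    }
}
```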

The Stream interface provides several static methods to create streams:

```java
// Stream of specific elements
Stream<String> elementStream = Stream.of("Alex", "Brian", "Charles");

// Empty stream
Stream<String> emptyStream = Stream.empty();

// Infinite stream generated by a supplier, truncated to 5 elements
Stream<UUID> uuidStream = Stream.generate(UUID::randomUUID).limit(5);

// Infinite stream of sequential elements, truncated to 10 elements
Stream<Integer> iterateStream = Stream.iterate(1, n -> n + 1).limit(10);

// Iterate with a predicate (Java 9+): 1, 3, 5, 7, 9
Stream<Integer> predicateStream = Stream.iterate(1, n -> n < 10, n -> n + 2);
```

There are specialized stream types for primitives to avoid boxing overhead:

```java
// IntStream, LongStream, DoubleStream
IntStream intRangeStream = IntStream.range(1, 6);             // 1, 2, 3, 4, 5 (end exclusive)
IntStream intRangeClosedStream = IntStream.rangeClosed(1, 5); // 1, 2, 3, 4, 5 (end inclusive)

// From Object stream to primitive stream
record Person(String name, int age) {}
List<Person> people = List.of(
        new Person("Alex", 25),
        new Person("Brian", 32),
        new Person("Charles", 41));

var average = people.stream()
        .mapToInt(Person::age) // Convert to IntStream
        .average()             // IntStream methods like average(), sum(), etc. are now available
        .orElse(0.0);          // average() returns an OptionalDouble
```
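Primitive streams also offer summaryStatistics() for computing several aggregates in one pass, and boxed() for converting back to an object stream when you need to collect into a List. A short sketch with hypothetical helper names:

```java
import java.util.IntSummaryStatistics;
import java.util.List;
import java.util.stream.IntStream;

class PrimitiveStreamDemo {

    // One pass over the ages computing count, sum, min, max and average.
    static IntSummaryStatistics ageStats(int[] ages) {
        return IntStream.of(ages).summaryStatistics();
    }

    // boxed() turns IntStream into Stream<Integer> so the results can go into a List.
    static List<Integer> squares(int upToInclusive) {
        return IntStream.rangeClosed(1, upToInclusive)
                .map(n -> n * n)
                .boxed()
                .toList();
    }

    public static void main(String[] args) {
        IntSummaryStatistics stats = ageStats(new int[] {25, 32, 41});
        System.out.println(stats.getMin() + " " + stats.getMax() + " " + stats.getSum()); // 25 41 98
        System.out.println(squares(4)); // [1, 4, 9, 16]
    }
}
```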

Multiple streams can be concatenated into a single stream:

```java
Stream<String> stream1 = Stream.of("A", "B", "C");
Stream<String> stream2 = Stream.of("X", "Y", "Z");
Stream<String> combinedStream = Stream.concat(stream1, stream2); // A, B, C, X, Y, Z
```
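Stream.concat() consumes both of its input streams, so stream1 and stream2 cannot be used again afterwards. More generally, any stream can be traversed only once. A short sketch (helper names are hypothetical) that shows the resulting IllegalStateException and the common workaround: a Supplier that builds a fresh stream on demand.

```java
import java.util.function.Supplier;
import java.util.stream.Stream;

class SingleUseStreamDemo {

    // Returns true if a stream that was passed to Stream.concat() can no longer be used.
    static boolean reuseAfterConcatFails() {
        Stream<String> stream1 = Stream.of("A", "B", "C");
        Stream<String> combined = Stream.concat(stream1, Stream.of("X", "Y", "Z"));
        System.out.println(combined.count()); // 6
        try {
            stream1.count(); // stream1 is already linked into combined
            return false;
        } catch (IllegalStateException e) {
            return true;     // "stream has already been operated upon or closed"
        }
    }

    // A Supplier creates a fresh stream for each pipeline, so the same data can be processed twice.
    static long countTwice(Supplier<Stream<String>> source) {
        return source.get().count() + source.get().count();
    }

    public static void main(String[] args) {
        System.out.println(reuseAfterConcatFails());                    // true
        System.out.println(countTwice(() -> Stream.of("A", "B", "C"))); // 6
    }
}
```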

Let's explore intermediate and terminal operations through some examples.

We'll use a dataset of e-commerce orders throughout this tutorial. Here's our model:

```java
record Customer(
        String id,
        String name,
        String email,
        LocalDate registrationDate,
        String tier // "standard", "premium", or "elite"
) {}

record Product(
        String id,
        String name,
        String category,
        BigDecimal price
) {}

record OrderItem(
        Product product,
        int quantity
) {}

record Order(
        String id,
        Customer customer,
        LocalDate orderDate,
        List<OrderItem> items,
        String status // "placed", "shipped", "delivered", or "canceled"
) {}
```

Let's create some sample data to work with:

```java
List<Product> products = List.of(
        new Product("P1", "iPhone 14", "Electronics", new BigDecimal("999.99")),
        new Product("P2", "MacBook Pro", "Electronics", new BigDecimal("1999.99")),
        new Product("P3", "Coffee Maker", "Appliances", new BigDecimal("89.99")),
        new Product("P4", "Running Shoes", "Sportswear", new BigDecimal("129.99")),
        new Product("P5", "Yoga Mat", "Sportswear", new BigDecimal("25.99")),
        new Product("P6", "Water Bottle", "Sportswear", new BigDecimal("12.99")),
        new Product("P7", "Wireless Earbuds", "Electronics", new BigDecimal("159.99")),
        new Product("P8", "Smart Watch", "Electronics", new BigDecimal("349.99")),
        new Product("P9", "Blender", "Appliances", new BigDecimal("79.99")),
        new Product("P10", "Desk Lamp", "Home", new BigDecimal("34.99"))
);

List<Customer> customers = List.of(
        new Customer("C1", "John Smith", "john@example.com", LocalDate.of(2020, 1, 15), "elite"),
        new Customer("C2", "Emma Johnson", "emma@example.com", LocalDate.of(2021, 3, 20), "standard"),
        new Customer("C3", "Michael Brown", "michael@example.com", LocalDate.of(2019, 7, 5), "premium"),
        new Customer("C4", "Olivia Wilson", "olivia@example.com", LocalDate.of(2022, 2, 10), "standard"),
        new Customer("C5", "William Davis", "william@example.com", LocalDate.of(2020, 11, 25), "elite")
);

List<Order> orders = List.of(
        new Order("O1", customers.get(0), LocalDate.of(2023, 3, 15), List.of(
                new OrderItem(products.get(0), 1),
                new OrderItem(products.get(7), 1)
        ), "delivered"),
        new Order("O2", customers.get(2), LocalDate.of(2023, 4, 2), List.of(
                new OrderItem(products.get(1), 1)
        ), "delivered"),
        new Order("O3", customers.get(1), LocalDate.of(2023, 4, 15), List.of(
                new OrderItem(products.get(2), 1),
                new OrderItem(products.get(9), 2)
        ), "shipped"),
        new Order("O4", customers.get(0), LocalDate.of(2023, 5, 1), List.of(
                new OrderItem(products.get(3), 1),
                new OrderItem(products.get(4), 1),
                new OrderItem(products.get(5), 2)
        ), "placed"),
        new Order("O5", customers.get(4), LocalDate.of(2023, 5, 5), List.of(
                new OrderItem(products.get(6), 1)
        ), "canceled"),
        new Order("O6", customers.get(3), LocalDate.of(2023, 5, 10), List.of(
                new OrderItem(products.get(8), 1),
                new OrderItem(products.get(9), 1)
        ), "placed"),
        new Order("O7", customers.get(2), LocalDate.of(2023, 5, 15), List.of(
                new OrderItem(products.get(0), 1),
                new OrderItem(products.get(1), 1)
        ), "placed"),
        new Order("O8", customers.get(0), LocalDate.of(2023, 5, 20), List.of(
                new OrderItem(products.get(7), 1)
        ), "placed")
);
```

Scenario: Find all electronic products and create a list of their names.

```java
List<String> electronicProductNames = products.stream()
        .filter(product -> product.category().equals("Electronics"))
        .map(Product::name)
        .collect(Collectors.toList());

System.out.println(electronicProductNames);
// Output: [iPhone 14, MacBook Pro, Wireless Earbuds, Smart Watch]
```

What's happening:

- stream(): Creates a stream from the products list.
- filter(): An intermediate operation that keeps only the products matching a predicate. Here we pass a lambda that checks whether the product's category is "Electronics".
- map(): Another intermediate operation that transforms each Product into its name. The method reference Product::name extracts the name from each Product object.
- collect(): A terminal operation that gathers the results into a new List. Streams are lazy: nothing is processed until a terminal operation is invoked.

Scenario: Find the first product in the "Sportswear" category that costs less than $20.

```java
Optional<Product> affordableSportswearProduct = products.stream()
        .filter(product -> product.category().equals("Sportswear"))
        .filter(product -> product.price().compareTo(new BigDecimal("20")) < 0)
        .findFirst();

affordableSportswearProduct.ifPresent(product ->
        System.out.println("Affordable sportswear: " + product.name() + " - $" + product.price()));
// Output: Affordable sportswear: Water Bottle - $12.99
```

What's happening:

- Two filter() operations chain together to find sportswear products under $20. You could also combine them into a single filter using logical AND (&&).
- findFirst() is a terminal operation that returns an Optional containing the first match.
- ifPresent() executes the provided lambda only if a match was found.

Optional is a container object that may or may not contain a non-null value. It helps avoid explicit null checks and NullPointerException, and it is good practice to use Optional as the return type of methods that may not always return a value.
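Rather than calling isPresent() and get(), an Optional can be transformed and given a fallback in a single expression. Here is a minimal sketch that reuses the article's Product record shape; the cheapestLabel helper is hypothetical.

```java
import java.math.BigDecimal;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

class OptionalDemo {
    record Product(String id, String name, String category, BigDecimal price) {}

    // Finds the cheapest product in a category and formats it, with a fallback when none exists.
    static String cheapestLabel(List<Product> products, String category) {
        Optional<Product> cheapest = products.stream()
                .filter(p -> p.category().equals(category))
                .min(Comparator.comparing(Product::price));
        return cheapest
                .map(p -> p.name() + " - $" + p.price()) // runs only when a value is present
                .orElse("No products in " + category);   // used when the Optional is empty
    }

    public static void main(String[] args) {
        List<Product> products = List.of(
                new Product("P4", "Running Shoes", "Sportswear", new BigDecimal("129.99")),
                new Product("P5", "Yoga Mat", "Sportswear", new BigDecimal("25.99")),
                new Product("P3", "Coffee Maker", "Appliances", new BigDecimal("89.99")));
        System.out.println(cheapestLabel(products, "Sportswear")); // Yoga Mat - $25.99
        System.out.println(cheapestLabel(products, "Toys"));       // No products in Toys
    }
}
```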

Scenario: Create a list of order summaries showing order ID and total item count.

```java
record OrderSummary(String orderId, int itemCount) {}

List<OrderSummary> orderSummaries = orders.stream()
        .map(order -> new OrderSummary(
                order.id(),
                order.items().stream().mapToInt(OrderItem::quantity).sum()
        ))
        .collect(Collectors.toList());

orderSummaries.forEach(System.out::println);
// Output:
// OrderSummary[orderId=O1, itemCount=2]
// OrderSummary[orderId=O2, itemCount=1]
// OrderSummary[orderId=O3, itemCount=3]
// ... and so on
```

What's happening:

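- map() transforms each Order into an OrderSummary. The item count comes from a nested stream over the order's items, using mapToInt() and sum() to total the quantities.

Scenario: Show all products sorted by price (lowest to highest).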

```java
List<Product> sortedProducts = products.stream()
        .sorted(Comparator.comparing(Product::price))
        .collect(Collectors.toList());

sortedProducts.forEach(product -> System.out.println(product.name() + " - $" + product.price()));
// Output:
// Water Bottle - $12.99
// Yoga Mat - $25.99
// Desk Lamp - $34.99
// ... (remaining products in ascending price order)
```

What's happening:

- sorted() with a Comparator arranges products by price.
- Comparator.comparing() creates a comparator based on the Product::price method reference.
- You can chain comparators to sort by multiple fields. For example, Comparator.comparing(Product::category).thenComparing(Product::price) first sorts by category and then by price within each category.
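Here is a sketch of a two-level sort, assuming the article's Product record: category ascending, then price descending within each category (the helper name is hypothetical). The reversal applies only to the second key because Comparator.reverseOrder() is passed to thenComparing(). Calling .reversed() at the end of the chain would instead reverse the whole ordering, categories included.

```java
import java.math.BigDecimal;
import java.util.Comparator;
import java.util.List;

class ComparatorChainDemo {
    record Product(String id, String name, String category, BigDecimal price) {}

    // Sorts by category (A to Z), then by price from highest to lowest within each category.
    static List<String> byCategoryThenPriceDesc(List<Product> products) {
        return products.stream()
                .sorted(Comparator.comparing(Product::category)
                        .thenComparing(Product::price, Comparator.reverseOrder()))
                .map(Product::name)
                .toList();
    }

    public static void main(String[] args) {
        List<Product> products = List.of(
                new Product("P5", "Yoga Mat", "Sportswear", new BigDecimal("25.99")),
                new Product("P3", "Coffee Maker", "Appliances", new BigDecimal("89.99")),
                new Product("P4", "Running Shoes", "Sportswear", new BigDecimal("129.99")),
                new Product("P9", "Blender", "Appliances", new BigDecimal("79.99")));
        System.out.println(byCategoryThenPriceDesc(products));
        // [Coffee Maker, Blender, Running Shoes, Yoga Mat]
    }
}
```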

Scenario: Implement a simple pagination for products, showing the second page with 3 products per page.

```java
int pageSize = 3;
int pageNumber = 1; // 0-based index, so this is the second page

List<Product> paginatedProducts = products.stream()
        .skip(pageSize * pageNumber)
        .limit(pageSize)
        .collect(Collectors.toList());

paginatedProducts.forEach(product -> System.out.println(product.name()));
// Output:
// Running Shoes
// Yoga Mat
// Water Bottle
```

What's happening:

- skip() bypasses the items on the earlier pages (pageSize * pageNumber items).
- limit() takes at most pageSize items to fill the requested page.

Scenario: Create a list of all products that have been ordered.

```java
List<Product> orderedProducts = orders.stream()
        .flatMap(order -> order.items().stream())
        .map(OrderItem::product)
        .distinct()
        .collect(Collectors.toList());

orderedProducts.forEach(product -> System.out.println(product.name()));
// Output will show each product that appears in at least one order, without duplicates
```

What's happening:

- flatMap() converts each order's list of items into a stream, then flattens them all into a single stream.
- map() extracts just the product from each order item.
- distinct() removes products that were ordered more than once. Records implement equals() by value, so the same product appearing in different orders compares equal.

Scenario: Calculate the total revenue from all orders.

```java
BigDecimal totalRevenue = orders.stream()
        .flatMap(order -> order.items().stream())
        .map(item -> item.product().price().multiply(BigDecimal.valueOf(item.quantity())))
        .reduce(BigDecimal.ZERO, BigDecimal::add);

System.out.println("Total Revenue: $" + totalRevenue);
// Output: Total Revenue: $7316.84 (includes every order, regardless of status)
```

What's happening:

- flatMap() flattens the order items into a single stream.
- map() calculates the revenue for each item by multiplying its price by its quantity.
- reduce() aggregates all item revenues into a single total, starting from BigDecimal.ZERO.

Scenario: Check whether any product is in the "Electronics" category, whether all products cost more than $100, and whether no product is in the "Toys" category, then find any product priced over $1000.

```java
boolean anyElectronics = products.stream()
        .anyMatch(product -> product.category().equals("Electronics"));

boolean allExpensive = products.stream()
        .allMatch(product -> product.price().compareTo(new BigDecimal("100")) > 0);

boolean noneToys = products.stream()
        .noneMatch(product -> product.category().equals("Toys"));

Optional<Product> anyHighPriced = products.stream()
        .filter(product -> product.price().compareTo(new BigDecimal("1000")) > 0)
        .findAny();

System.out.println("Any electronics? " + anyElectronics);
System.out.println("All products expensive? " + allExpensive);
System.out.println("No toys? " + noneToys);
anyHighPriced.ifPresent(p -> System.out.println("A high-priced product: " + p.name()));
// Output:
// Any electronics? true
// All products expensive? false
// No toys? true
// A high-priced product: MacBook Pro
```

What's happening:

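- anyMatch(), allMatch(), and noneMatch() are terminal operations that short-circuit as soon as the result is determined.
- findAny() returns some matching element. It is free to pick any match, which lets parallel streams return whichever match is found first; use findFirst() when encounter order matters.

Scenario: Trace the stream pipeline to debug filtering and mapping.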

```java
List<String> debuggedNames = products.stream()
        .peek(p -> System.out.println("Before filter: " + p.name()))
        .filter(product -> product.category().equals("Electronics"))
        .peek(p -> System.out.println("After filter: " + p.name()))
        .map(Product::name)
        .peek(name -> System.out.println("Mapped name: " + name))
        .collect(Collectors.toList());
```

What's happening:

- peek() is an intermediate operation for debugging or tracing elements as they flow through the pipeline.
- Avoid using peek() for side effects in production code.

Collectors are used to gather the results of stream operations into a collection or other data structure. Here are some common collectors:

Scenario: Create a comma-separated list of all product categories.

```java
String categories = products.stream()
        .map(Product::category)
        .distinct()
        .sorted()
        .collect(Collectors.joining(", "));

System.out.println("Available categories: " + categories);
// Output: Available categories: Appliances, Electronics, Home, Sportswear
```

What's happening:

- map() extracts just the category from each product.
- distinct() removes duplicate categories.
- sorted() arranges them alphabetically.
- Collectors.joining() combines all categories with the specified delimiter.

Scenario: Calculate statistics for product prices.

```java
DoubleSummaryStatistics priceStatistics = products.stream()
        .map(product -> product.price().doubleValue())
        .collect(Collectors.summarizingDouble(price -> price));

System.out.println("Product price statistics:");
System.out.println("Count: " + priceStatistics.getCount());
System.out.println("Average: $" + String.format("%.2f", priceStatistics.getAverage()));
System.out.println("Min: $" + String.format("%.2f", priceStatistics.getMin()));
System.out.println("Max: $" + String.format("%.2f", priceStatistics.getMax()));
System.out.println("Sum: $" + String.format("%.2f", priceStatistics.getSum()));
// Output:
// Product price statistics:
// Count: 10
// Average: $388.39
// Min: $12.99
// Max: $1999.99
// Sum: $3883.90
```

What's happening:

- map() converts each BigDecimal price to a Double.
- The summarizingDouble() collector computes the count, sum, minimum, average, and maximum in a single pass as items are processed.

Scenario: Collect product names into an unmodifiable list (Java 10+).

```java
List<String> unmodifiableNames = products.stream()
        .map(Product::name)
        .collect(Collectors.toUnmodifiableList());

// unmodifiableNames.add("New Product"); // Throws UnsupportedOperationException
```

What's happening:

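- Collectors.toUnmodifiableList() returns an unmodifiable list. Similar methods exist for Set and Map (toUnmodifiableSet() and toUnmodifiableMap()).

If the output collection needs to be an unmodifiable list, we can also use Stream.toList() (Java 16+). It behaves like Collectors.toUnmodifiableList() except that it permits null elements, whereas toUnmodifiableList() throws a NullPointerException when it encounters null.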

```java
List<String> unmodifiableNames = products.stream()
        .map(Product::name)
        .toList();

// unmodifiableNames.add("New Product"); // Throws UnsupportedOperationException
```
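The null-handling difference between the two is easy to trip over. A minimal sketch, with hypothetical helper names, that shows Stream.toList() accepting a null element, Collectors.toUnmodifiableList() rejecting it, and the toList() result refusing modification:

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class ToListDemo {

    // Stream.toList() (Java 16+) returns an unmodifiable list that may contain nulls.
    static List<String> withToList(String... values) {
        return Arrays.stream(values).toList();
    }

    // Collectors.toUnmodifiableList() (Java 10+) throws NullPointerException on a null element.
    static boolean toUnmodifiableListRejectsNull(String... values) {
        try {
            Arrays.stream(values).collect(Collectors.toUnmodifiableList());
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    // The list returned by Stream.toList() rejects modification.
    static boolean toListIsUnmodifiable() {
        try {
            withToList("a").add("b");
            return false;
        } catch (UnsupportedOperationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(withToList("a", null));                   // [a, null]
        System.out.println(toUnmodifiableListRejectsNull("a", null)); // true
        System.out.println(toListIsUnmodifiable());                   // true
    }
}
```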

Scenario: Collect all product categories into a Set to remove duplicates.

```java
Set<String> categorySet = products.stream()
        .map(Product::category)
        .collect(Collectors.toSet());

System.out.println(categorySet); // The four distinct categories, in no guaranteed order
```

What's happening:

- Collectors.toSet() collects elements into a Set, automatically removing duplicates. The resulting Set makes no guarantee about iteration order.

Scenario: Create a map of product names to their prices.

```java
Map<String, BigDecimal> productPriceMap = products.stream()
        .collect(Collectors.toMap(
                Product::name,
                Product::price
        ));

System.out.println(productPriceMap);
// Output (iteration order may vary): {iPhone 14=999.99, MacBook Pro=1999.99, Coffee Maker=89.99, ...}
```

What's happening:

- Collectors.toMap() creates a map where the first argument extracts the key (product name) and the second extracts the value (product price).
- If two elements map to the same key, a java.lang.IllegalStateException is thrown. To handle this, we can provide a merge function as the third argument to toMap().

Scenario: Use filtering and mapping as part of a collector.

```java
Map<String, List<String>> electronicsNamesByCategory = products.stream()
        .collect(Collectors.groupingBy(
                Product::category,
                Collectors.filtering(
                        p -> p.category().equals("Electronics"),
                        Collectors.mapping(Product::name, Collectors.toList())
                )
        ));

System.out.println(electronicsNamesByCategory);
// Every category appears as a key; only "Electronics" maps to a non-empty list
```

What's happening:

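- Collectors.filtering() (Java 9+) filters elements within each group. Unlike calling filter() before groupingBy(), it keeps every category as a key, so the non-electronics categories map to empty lists.
- Collectors.mapping() transforms elements during collection.

Scenario: Safely collect products by price, merging names if prices are the same.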

```java
Map<BigDecimal, String> priceToNames = products.stream()
        .collect(Collectors.toMap(
                Product::price,
                Product::name,
                (name1, name2) -> name1 + ", " + name2 // Merge function for duplicate keys
        ));

System.out.println(priceToNames);
```

What's happening:

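- The third argument to toMap() is a merge function that resolves duplicate keys; here, the names of products with the same price are concatenated. Every product in the sample data has a distinct price, so the merge function is never actually invoked with this dataset.

Scenario: Count products in each category and collect their names.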

```java
Map<String, Long> countByCategory = products.stream()
        .collect(Collectors.groupingBy(Product::category, Collectors.counting()));

Map<String, List<String>> namesByCategory = products.stream()
        .collect(Collectors.groupingBy(Product::category,
                Collectors.mapping(Product::name, Collectors.toList())));
```

What's happening:

- counting() and mapping() are downstream collectors: groupingBy() applies them to the elements of each group instead of collecting each group into a list of products.

Scenario: Divide products into "expensive" (>$100) and "affordable" categories.

```java
Map<Boolean, List<Product>> pricePartition = products.stream()
        .collect(Collectors.partitioningBy(
                product -> product.price().compareTo(new BigDecimal("100")) > 0
        ));

System.out.println("Expensive products:");
pricePartition.get(true).forEach(p -> System.out.println("- " + p.name() + " ($" + p.price() + ")"));

System.out.println("\nAffordable products:");
pricePartition.get(false).forEach(p -> System.out.println("- " + p.name() + " ($" + p.price() + ")"));
```

What's happening:

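- partitioningBy() separates products into two groups based on the boolean predicate. The resulting map always contains both the true and false keys, even when one of the groups is empty.

Scenario: Group all products by their category.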

```java
Map<String, List<Product>> productsByCategory = products.stream()
        .collect(Collectors.groupingBy(Product::category));

productsByCategory.forEach((category, prods) -> {
    System.out.println(category + ":");
    prods.forEach(p -> System.out.println("  - " + p.name()));
});
```

What's happening:

- groupingBy() creates a map where each key is a category and each value is the list of products in that category.

Scenario: Get the average price of products by category.

```java
Map<String, Double> avgPriceByCategory = products.stream()
        .collect(Collectors.groupingBy(
                Product::category,
                Collectors.averagingDouble(p -> p.price().doubleValue())
        ));

avgPriceByCategory.forEach((category, avgPrice) ->
        System.out.println(category + ": $" + String.format("%.2f", avgPrice)));
// Output (order may vary):
// Electronics: $877.49
// Appliances: $84.99
// Sportswear: $56.32
// Home: $34.99
```

What's happening:

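groupingBy() with a downstream collector combines two operations:

- Grouping: Product::category decides which group each product belongs to.
- Aggregating: averagingDouble() reduces each group to the average of its prices, so each map value is a Double rather than a list of products.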
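groupingBy() makes no guarantee about the type of map it returns or its iteration order. This is why the category order in the output above can vary. The sketch below assumes the article's Product record and uses a hypothetical helper name. It applies the three-argument overload (classifier, map factory, downstream collector) to get sorted keys from a TreeMap, with a different downstream collector that keeps the highest price in each category.

```java
import java.math.BigDecimal;
import java.util.List;
import java.util.TreeMap;
import java.util.stream.Collectors;

class SortedGroupingDemo {
    record Product(String id, String name, String category, BigDecimal price) {}

    // Classifier, map factory, downstream collector: highest price per category, keys sorted.
    static TreeMap<String, BigDecimal> maxPriceByCategory(List<Product> products) {
        return products.stream()
                .collect(Collectors.groupingBy(
                        Product::category,
                        TreeMap::new, // keys iterate in natural (alphabetical) order
                        Collectors.reducing(BigDecimal.ZERO, Product::price, BigDecimal::max)));
    }

    public static void main(String[] args) {
        List<Product> products = List.of(
                new Product("P1", "iPhone 14", "Electronics", new BigDecimal("999.99")),
                new Product("P2", "MacBook Pro", "Electronics", new BigDecimal("1999.99")),
                new Product("P4", "Running Shoes", "Sportswear", new BigDecimal("129.99")),
                new Product("P5", "Yoga Mat", "Sportswear", new BigDecimal("25.99")),
                new Product("P9", "Blender", "Appliances", new BigDecimal("79.99")));
        System.out.println(maxPriceByCategory(products));
        // {Appliances=79.99, Electronics=1999.99, Sportswear=129.99}
    }
}
```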

Scenario: Analyze orders by customer tier and order status, showing order count and total items.

```java
record OrderStats(long orderCount, int totalItems) {}

Map<String, Map<String, OrderStats>> orderAnalysisByTierAndStatus = orders.stream()
        .collect(Collectors.groupingBy(
                order -> order.customer().tier(),
                Collectors.groupingBy(
                        Order::status,
                        Collectors.collectingAndThen(
                                Collectors.toList(),
                                ordersList -> new OrderStats(
                                        ordersList.size(),
                                        ordersList.stream()
                                                .flatMap(order -> order.items().stream())
                                                .mapToInt(OrderItem::quantity)
                                                .sum()
                                )
                        )
                )
        ));

orderAnalysisByTierAndStatus.forEach((tier, statusMap) -> {
    System.out.println("Customer Tier: " + tier);
    statusMap.forEach((status, stats) -> {
        System.out.println("  Status: " + status);
        System.out.println("    Order Count: " + stats.orderCount());
        System.out.println("    Total Items: " + stats.totalItems());
    });
});
```

What's happening:

- The outer groupingBy() separates orders by customer tier.
- The inner groupingBy() further separates each tier's orders by order status.
- collectingAndThen() transforms each collected list into an OrderStats object.

If the built-in collectors do not meet your needs, you can create a custom collector. This is useful for complex aggregations or when you need to maintain state across multiple elements.

Scenario: Build a custom collector to analyze orders by customer tier, showing average order value and total items.

```java
record TierAnalysis(int orderCount, BigDecimal totalRevenue, long totalItems) {
    double getAverageOrderValue() {
        return orderCount == 0 ? 0 : totalRevenue.doubleValue() / orderCount;
    }
}

// Mutable accumulation container. TierAnalysis is an immutable record, so the accumulator
// must update state in place: reassigning the lambda parameter to a new record would have
// no effect outside the lambda.
class TierAccumulator {
    int orderCount;
    BigDecimal totalRevenue = BigDecimal.ZERO;
    long totalItems;
}

class TierAnalysisCollector implements Collector<Order, TierAccumulator, TierAnalysis> {

    @Override
    public Supplier<TierAccumulator> supplier() {
        return TierAccumulator::new;
    }

    @Override
    public BiConsumer<TierAccumulator, Order> accumulator() {
        return (acc, order) -> {
            BigDecimal orderTotal = order.items().stream()
                    .map(item -> item.product().price()
                            .multiply(BigDecimal.valueOf(item.quantity())))
                    .reduce(BigDecimal.ZERO, BigDecimal::add);
            long itemCount = order.items().stream()
                    .mapToLong(OrderItem::quantity)
                    .sum();
            acc.orderCount++;
            acc.totalRevenue = acc.totalRevenue.add(orderTotal);
            acc.totalItems += itemCount;
        };
    }

    @Override
    public BinaryOperator<TierAccumulator> combiner() {
        // Merges partial results, e.g. when the stream runs in parallel
        return (left, right) -> {
            left.orderCount += right.orderCount;
            left.totalRevenue = left.totalRevenue.add(right.totalRevenue);
            left.totalItems += right.totalItems;
            return left;
        };
    }

    @Override
    public Function<TierAccumulator, TierAnalysis> finisher() {
        // Converts the mutable container into the immutable result
        return acc -> new TierAnalysis(acc.orderCount, acc.totalRevenue, acc.totalItems);
    }

    @Override
    public Set<Characteristics> characteristics() {
        // No IDENTITY_FINISH: the finisher performs a real conversion
        return Set.of();
    }
}

// Usage of the custom collector as the downstream collector of groupingBy()
Map<String, TierAnalysis> tierAnalysis = orders.stream()
        .collect(Collectors.groupingBy(
                order -> order.customer().tier(),
                new TierAnalysisCollector()
        ));

tierAnalysis.forEach((tier, analysis) -> {
    System.out.println("Tier: " + tier);
    System.out.println("  Orders: " + analysis.orderCount());
    System.out.println("  Total Revenue: $" + analysis.totalRevenue());
    System.out.println("  Total Items: " + analysis.totalItems());
    System.out.printf("  Avg. Order Value: $%.2f%n", analysis.getAverageOrderValue());
});
// Output for the elite tier (tier order may vary):
// Tier: elite
//   Orders: 4
//   Total Revenue: $2041.92
//   Total Items: 8
//   Avg. Order Value: $510.48
```

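What's happening:

- supplier() creates the empty container that results are accumulated into.
- accumulator() folds each Order into that container, adding the order's revenue and item count.
- combiner() merges two partial containers, which is needed when the stream runs in parallel.
- finisher() converts the container into the final result (a no-op when the two types are the same), and characteristics() declares hints such as IDENTITY_FINISH that let the framework skip unnecessary work.
- Passed as the downstream collector of groupingBy(), the custom collector runs once per customer tier.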
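For simpler aggregations, Collector.of() builds a collector from the same four functions without a dedicated class. The condensed sketch below makes an assumption: it uses a simplified, hypothetical SimpleOrder record that already carries its tier and total, unlike the article's Order model. The per-tier pattern is the same: a mutable container that is updated in place, plus a finisher that produces an immutable record.

```java
import java.math.BigDecimal;
import java.util.List;
import java.util.Map;
import java.util.stream.Collector;
import java.util.stream.Collectors;

class CollectorOfDemo {
    record SimpleOrder(String tier, BigDecimal total) {}       // hypothetical, simplified order
    record TierTotals(long orderCount, BigDecimal revenue) {}

    // Mutable container: the accumulator updates its fields in place.
    static final class Acc {
        long count;
        BigDecimal revenue = BigDecimal.ZERO;
    }

    static Collector<SimpleOrder, Acc, TierTotals> tierTotals() {
        return Collector.of(
                Acc::new,                                        // supplier
                (acc, order) -> {                                // accumulator
                    acc.count++;
                    acc.revenue = acc.revenue.add(order.total());
                },
                (left, right) -> {                               // combiner (parallel streams)
                    left.count += right.count;
                    left.revenue = left.revenue.add(right.revenue);
                    return left;
                },
                acc -> new TierTotals(acc.count, acc.revenue)); // finisher
    }

    static Map<String, TierTotals> byTier(List<SimpleOrder> orders) {
        return orders.stream().collect(Collectors.groupingBy(SimpleOrder::tier, tierTotals()));
    }

    public static void main(String[] args) {
        List<SimpleOrder> orders = List.of(
                new SimpleOrder("elite", new BigDecimal("1349.98")),
                new SimpleOrder("elite", new BigDecimal("181.96")),
                new SimpleOrder("premium", new BigDecimal("1999.99")));
        System.out.println(byTier(orders).get("elite")); // TierTotals[orderCount=2, revenue=1531.94]
    }
}
```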

Parallel streams allow for concurrent processing of data, which can improve performance on large datasets. We can use parallel streams by calling parallelStream() instead of stream().

Internally, parallel streams use the common ForkJoinPool to split the workload across multiple threads. This can significantly improve performance for CPU-bound work on large datasets.

Scenario: Use parallel streams to find all electronics products ordered by premium customers.

```java
List<Product> premiumElectronics = orders.parallelStream()
        .filter(order -> order.customer().tier().equals("premium"))
        .flatMap(order -> order.items().stream())
        .map(OrderItem::product)
        .filter(product -> product.category().equals("Electronics"))
        .distinct()
        .collect(Collectors.toList());

System.out.println("Electronics ordered by premium customers:");
premiumElectronics.forEach(p -> System.out.println("- " + p.name()));
// Output:
// Electronics ordered by premium customers:
// - MacBook Pro
// - iPhone 14
```

What's happening:

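- parallelStream() processes the data in parallel, potentially using multiple CPU cores.
- distinct() ensures no duplicate products in the result. Because the source List is ordered, distinct() and collect() still respect encounter order, so the output matches what a sequential stream would produce.

Parallel streams can improve performance but aren't always the best choice. Use them when:

- The dataset is large enough that the speedup outweighs the cost of splitting the work and merging the results.
- The per-element work is CPU-intensive rather than I/O-bound.
- The source splits efficiently, for example an ArrayList, an array, or IntStream.range().
- The operations are stateless and independent of each other.

Avoid parallel streams when:

- The dataset is small or the per-element work is trivial.
- The work is I/O-bound or blocking, since it ties up threads in the shared common pool.
- The source splits poorly, for example a LinkedList or Stream.iterate().
- The pipeline depends on encounter order with operations such as limit() or findFirst(), or mutates shared state.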

We need to ensure we are not mutating shared data while using parallel streams, since that leads to unpredictable results caused by race conditions. Also, because different threads process the elements, context that is normally propagated through thread-local variables (such as a trace ID) may not be available inside the lambdas.
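The usual fix for shared mutation is to let a collector do the merging instead of adding to a shared collection from inside forEach(). A minimal sketch (class and method names are illustrative), with the unsafe version shown only in a comment:

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

class ParallelSafetyDemo {

    // Anti-pattern (not executed here):
    //   List<Integer> shared = new ArrayList<>();
    //   IntStream.range(0, n).parallel().filter(i -> i % 2 == 0).forEach(shared::add);
    // ArrayList is not thread-safe, so elements can be lost or an exception thrown.

    // Safe alternative: collect() gives each worker its own partial list and merges them.
    static List<Integer> evensInParallel(int upToExclusive) {
        return IntStream.range(0, upToExclusive)
                .parallel()
                .filter(n -> n % 2 == 0)
                .boxed()
                .collect(Collectors.toList()); // encounter order is preserved
    }

    public static void main(String[] args) {
        List<Integer> evens = evensInParallel(10_000);
        System.out.println(evens.size());                                          // 5000
        System.out.println(evens.get(0) + " ... " + evens.get(evens.size() - 1)); // 0 ... 9998
    }
}
```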

The teeing() collector (Java 12+) lets you combine the results of two separate collectors into a single result. This is useful when you want to perform two different aggregations on the same data in a single pass.

Scenario: Calculate both the total revenue and count of products sold in a single stream operation.

```java
record SalesStatistics(BigDecimal totalRevenue, long totalProductsSold) {}

SalesStatistics salesStats = orders.stream()
        .flatMap(order -> order.items().stream())
        .collect(Collectors.teeing(
                Collectors.mapping(
                        item -> item.product().price().multiply(BigDecimal.valueOf(item.quantity())),
                        Collectors.reducing(BigDecimal.ZERO, BigDecimal::add)
                ),
                Collectors.summingLong(OrderItem::quantity),
                SalesStatistics::new
        ));

System.out.println("Total Revenue: $" + salesStats.totalRevenue());
System.out.println("Total Products Sold: " + salesStats.totalProductsSold());
// Output:
// Total Revenue: $7316.84
// Total Products Sold: 16
```

What's happening:

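- teeing() passes every element to two downstream collectors (revenue and quantity here) and combines their results with the merger function, SalesStatistics::new.

While Streams and Collectors have provided powerful data processing capabilities since Java 8, Java 22 introduced Stream Gatherers as a preview feature, and they were finalized in Java 24, taking stream processing to the next level.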

Stream Gatherers allow developers to create custom intermediate operations for streams, enabling more complex data transformations that were previously difficult to express.

```java
record Employee(String name, int age, String department) {}

List<Employee> employees = getEmployees();

// Using a fixed window gatherer to process employees in pairs
// (requires Java 24, or Java 22/23 with --enable-preview)
var employeePairs = employees.stream()
        .filter(employee -> employee.department().equals("Engineering"))
        .map(Employee::name)
        .gather(Gatherers.windowFixed(2)) // Group names in pairs
        .toList();
// Result: [[Alice, Mary], [John, Ramesh], [Jen]]
```

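A Gatherer consists of up to four functions:

- Initializer: creates the optional private state used while processing elements.
- Integrator: processes each input element, updates the state, and pushes zero or more results downstream; it can also stop processing early.
- Combiner: merges the state of two parallel evaluations, if the gatherer supports parallelism.
- Finisher: runs after the last element and can emit any remaining results, such as a final partial window.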

The java.util.stream.Gatherers class provides several built-in gatherers:

- Gatherers.fold(): Combines all stream elements into a single result, emitted once the stream ends.
- Gatherers.scan(): Performs incremental accumulation and emits each intermediate result.
- Gatherers.windowFixed(): Groups elements into fixed-size windows.
- Gatherers.windowSliding(): Creates overlapping windows of elements.
- Gatherers.mapConcurrent(): Maps elements concurrently, using virtual threads, with a bounded level of concurrency.

For more details on Stream Gatherers, including implementation examples and advanced usage, check out our dedicated blog post: Stream Gatherers using Java 22.

Avoid side effects in stream operations. Don't modify external state in lambdas as this breaks the functional programming model and can lead to unpredictable behavior.

Keep pipelines short and readable. Long chains of operations can be hard to follow. Break them into smaller methods if necessary.

Prefer method references over lambdas when possible for cleaner, more readable code: stream.map(Person::getName) instead of stream.map(person -> person.getName()).

Use specialized primitive streams (IntStream, LongStream, DoubleStream) when working with primitives to avoid boxing/unboxing overhead.

Choose the appropriate terminal operation for your needs - don't use collect() when a simpler operation like findFirst() or anyMatch() will do.

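Be careful with stateful operations. sorted() and distinct() may need to buffer the whole stream before emitting results, and limit() and skip() can be expensive on ordered parallel streams, so all of them can hurt performance with large datasets.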

Use parallel streams judiciously. They aren't always faster and can sometimes be slower due to overhead. Benchmark your specific use case before committing to parallel processing.

Use peek() for debugging only, not for performing actual operations. It's intended for observation, not for changing state.

Remember that a stream can be consumed only once. After a terminal operation runs, any further use of the stream throws an IllegalStateException; create a new stream from the source instead.

Leverage built-in collectors before implementing custom ones. The Collectors class provides many powerful implementations for common use cases.

Handle infinite streams carefully by always including limiting operations like limit() or short-circuiting operations like findFirst() to prevent infinite processing.
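Here is the difference between limit() and takeWhile() (Java 9+) on an infinite stream, as a small sketch with hypothetical helper names. Note that filter(n -> n < bound) would not work in the second case. filter() keeps pulling elements from the infinite source forever, whereas takeWhile() stops at the first element that fails the predicate.

```java
import java.util.List;
import java.util.stream.Stream;

class InfiniteStreamDemo {

    // limit(): stop after a fixed number of elements.
    static List<Integer> firstPowersOfTwo(int count) {
        return Stream.iterate(1, n -> n * 2).limit(count).toList();
    }

    // takeWhile(): stop at the first element that fails the predicate.
    static List<Integer> powersOfTwoBelow(int bound) {
        return Stream.iterate(1, n -> n * 2).takeWhile(n -> n < bound).toList();
    }

    public static void main(String[] args) {
        System.out.println(firstPowersOfTwo(5));   // [1, 2, 4, 8, 16]
        System.out.println(powersOfTwoBelow(100)); // [1, 2, 4, 8, 16, 32, 64]
    }
}
```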

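While Java Streams are powerful, there are scenarios where traditional loops may be more appropriate:

- The logic needs to modify local variables, break out early on complex conditions, or return from the enclosing method mid-iteration.
- The loop body calls methods that throw checked exceptions, which the standard functional interfaces used by streams cannot propagate without wrapping.
- The code works with indexes or neighboring elements, for example comparing element i with element i + 1.
- A simple loop over a small collection is easier to read and debug than the equivalent pipeline.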

Java Streams and Collectors provide a powerful, declarative approach to data processing that can significantly improve code readability and maintainability. By understanding the various operations and collectors available, you can solve complex data transformation problems with elegant, concise code.
